OpenAI's DALL-E turns weird text into weird images

In brief: OpenAI wants to create a general artificial intelligence (AGI) that benefits all of humanity, and that includes being able to understand everyday concepts and blend them in creative ways. The company's latest AI models combine natural language processing with image recognition and show promising results towards that goal.

OpenAI is known for developing impressive AI models like GPT-2 and GPT-3, which are capable of writing believable fake news but can also become essential tools in detecting and filtering online misinformation and spam. Previously, they've also created bots that can beat human opponents in games like Dota 2, as they can play in a way that would require thousands of years' worth of training.

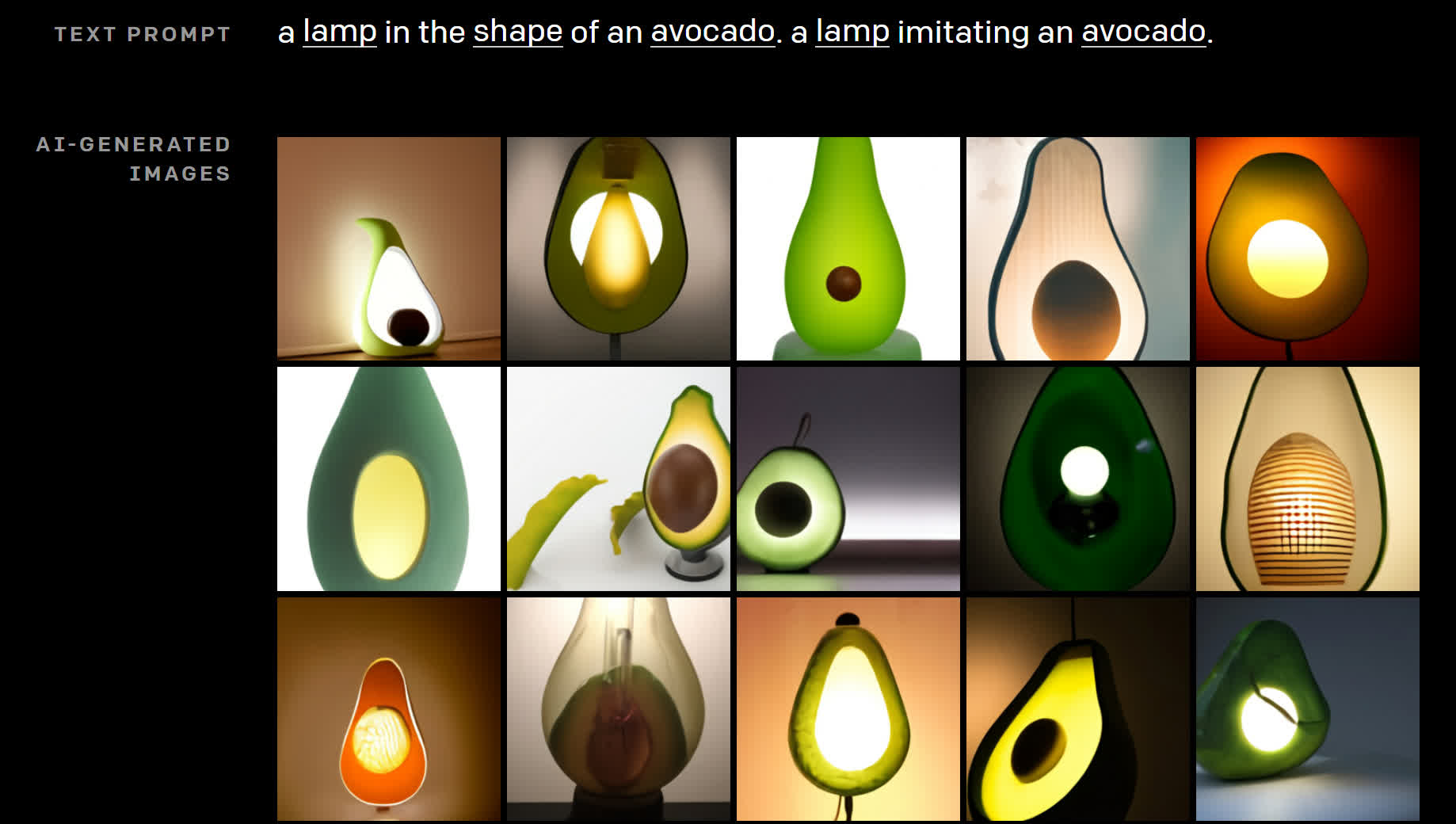

The research group has come up with two additional models that build on that foundation. The first, called DALL-E, is a neural network that can essentially create an image based on text input. OpenAI co-founder and chief scientist Ilya Sutskever notes that with its 12 billion parameters, DALL-E is capable of creating almost anything you can describe, even concepts that it would never have seen in training.

For example, the new AI system is able to generate an image that represents "an illustration of a baby daikon radish in a tutu walking a dog," "a stained glass window with an image of a blue strawberry," "an armchair in the shape of an avocado," or "a snail made of a harp."

DALL-E is able to generate several plausible results for these descriptions and many more, which shows that manipulating visual concepts through the use of natural language is now within reach.

Sutskever says that "work involving generative models has the potential for significant, broad societal impacts. In the future, we plan to analyze how models like DALL-E relate to societal issues like economic impact on certain work processes and professions, the potential for bias in the model outputs, and the longer-term ethical challenges implied by this technology."

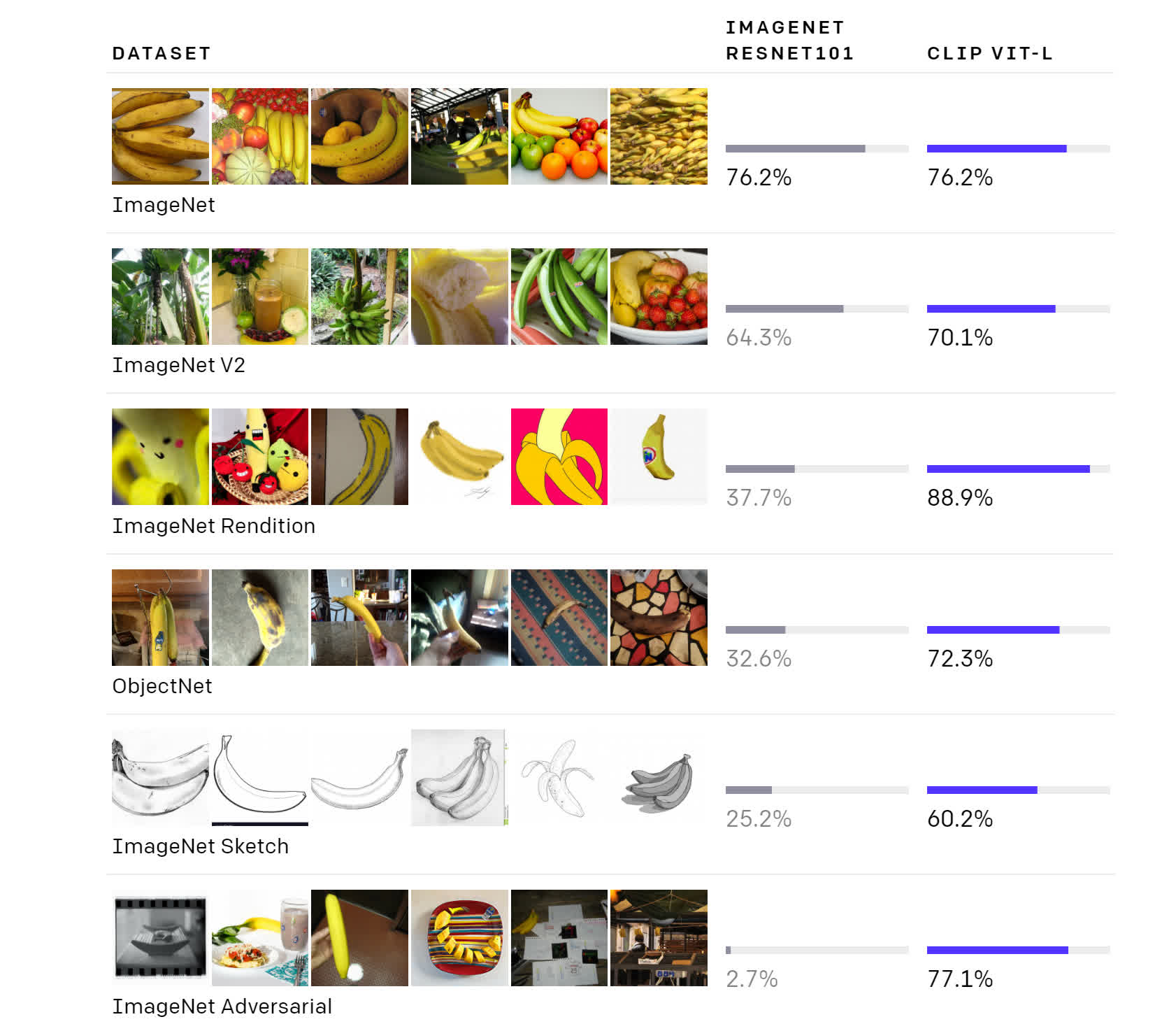

The second multimodal AI model introduced by OpenAI is called CLIP. Trained on no less than 400 million pairs of text and images scraped from around the web, CLIP's strength is its ability to take a visual concept and find the text description that's most likely to be an accurate description of it using very little training.
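To make the matching idea concrete, here is a minimal sketch of how an image could be paired with its most likely caption once both have been turned into vectors. It assumes that images and captions are mapped into a shared vector space and compared by cosine similarity. The three-dimensional vectors, the `temperature` value, and the function names below are made up for illustration and are not real CLIP outputs or part of any OpenAI API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rank_captions(image_vec, caption_vecs, temperature=0.1):
    """Rank candidate captions for one image, most likely first.

    `temperature` is a hypothetical scaling factor chosen for this toy
    example; lower values make the ranking more decisive.
    """
    captions = list(caption_vecs)
    scores = [
        cosine_similarity(image_vec, caption_vecs[c]) / temperature
        for c in captions
    ]
    probs = softmax(scores)
    return sorted(zip(captions, probs), key=lambda pair: pair[1], reverse=True)


# Toy embeddings, invented for illustration (not produced by any model).
IMAGE_VEC = [0.9, 0.1, 0.2]
CAPTION_VECS = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a cat": [0.1, 0.9, 0.3],
    "a photo of an avocado armchair": [0.2, 0.1, 0.9],
}

ranking = rank_captions(IMAGE_VEC, CAPTION_VECS)
for caption, prob in ranking:
    print(f"{prob:.3f}  {caption}")
```

In this sketch no caption-specific classifier is trained: adding a new candidate description only requires adding one more vector to compare against, which is roughly why this style of matching can get by with very little task-specific training.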

This can reduce the computational cost of AI in certain applications like optical character recognition (OCR), action recognition, and geo-localization. However, researchers found it fell short in other tasks like lymph node tumor detection and satellite imagery classification.

Ultimately, both DALL-E and CLIP were built to give language models like GPT-3 a better grasp of everyday concepts that we use to understand the world around us, even as they're still far from perfect. It's an important milestone for AI, which could pave the way to many useful tools that will augment humans in their work.

Source: https://www.techspot.com/news/88191-openai-dall-e-turns-weird-text-weird-images.html

Posted by: crusedowasobod.blogspot.com
